What If AI Content Came With Built-In Proof of Origin?

Encypher embeds invisible metadata to make AI content verifiable, tamper-proof, and trusted by default.

By: Erik Svilich, Founder & CEO | Encypher | C2PA | CAI

As generative AI reshapes how we create and share information, a major problem has emerged:

There's still no reliable way to prove whether a piece of text was generated by AI.

Most detection tools guess based on writing patterns. But guesswork leads to false positives — and real harm.

The Problem: AI Detectors Keep Getting It Wrong

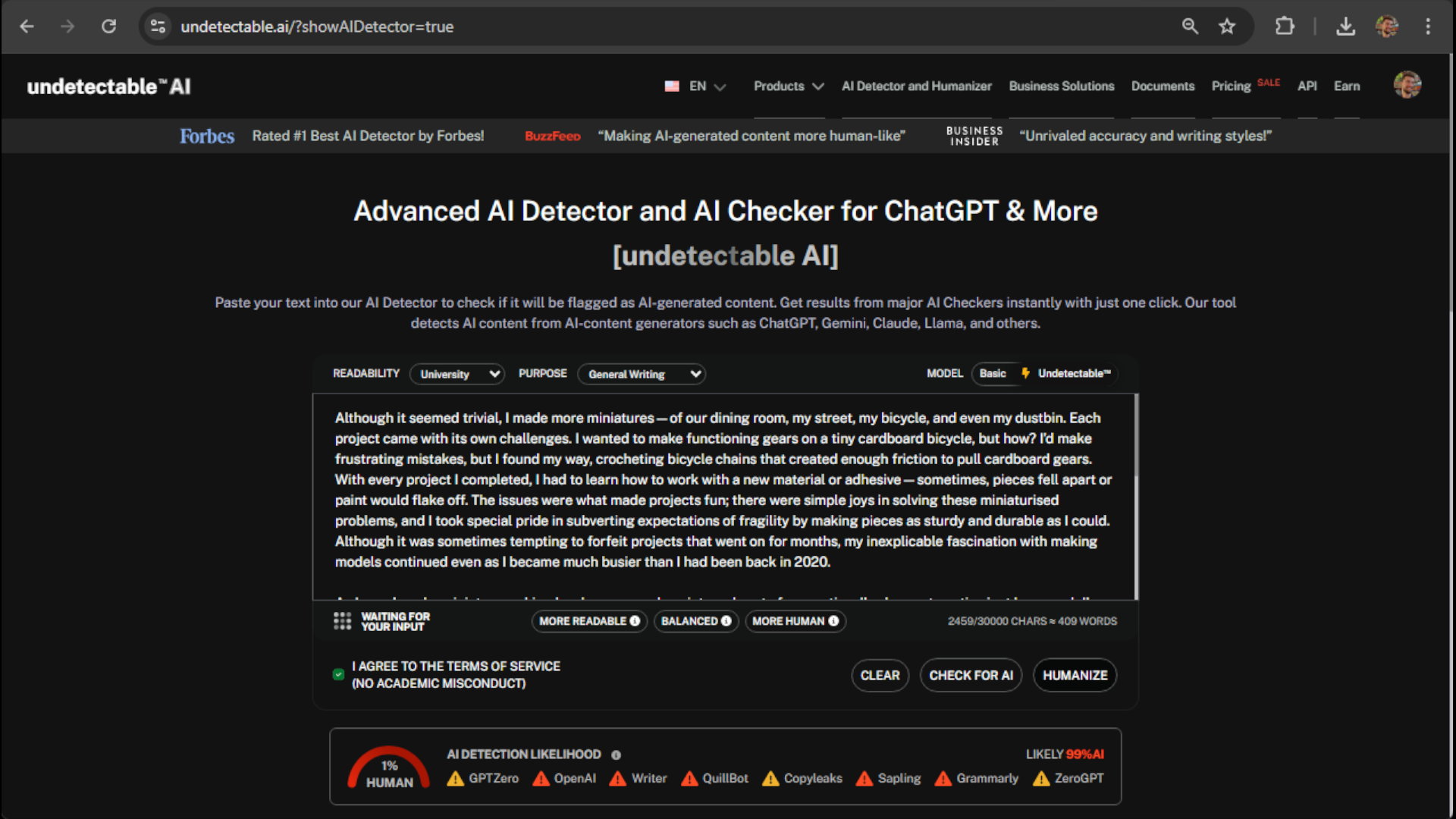

Detection tools like GPTZero and UndetectableAI analyze sentence structure, repetition, or "burstiness," hoping to flag machine-written content. But their results are often inconsistent — and dangerously inaccurate.
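To make the "guesswork" concrete, here is a toy sketch of a burstiness-style signal. It is an assumption for illustration only: commercial detectors do not publish their exact methods, and the function names and the sentence-length proxy below are hypothetical rather than anything GPTZero or UndetectableAI is known to run.

```python
import re
import statistics


def sentence_lengths(text: str) -> list:
    """Split on sentence-ending punctuation (naively) and count words per sentence."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Toy proxy: standard deviation of sentence length divided by the mean.

    Low values mean evenly sized sentences, which a pattern-based detector
    might treat as a hint of machine-written text.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The cat sat down. The dog ran off. The bird flew by."
varied = (
    "Stop. The storm that rolled in over the hills that night "
    "took everyone by surprise. We ran."
)

uniform_score = burstiness(uniform)
varied_score = burstiness(varied)
```

A person who happens to write in even, tidy sentences scores low on a signal like this, which is one way a genuine essay can end up flagged.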

📚 Case in point: An essay by a real student, published on the official Johns Hopkins admissions blog, was submitted to multiple AI detectors.

🔎 The result?

GPTZero and UndetectableAI flagged it as "99% AI-generated."

✅ But this wasn't AI. It was a real student's personal story.

These false positives aren't just errors — they have consequences.

Students, job seekers, and creators risk being accused of dishonesty based on flawed algorithms.

Even OpenAI discontinued its own detection tool, the AI Classifier, in July 2023, citing its low rate of accuracy.

The Solution: Encypher

What if we didn't need to guess?

What if AI-generated content came with built-in proof of origin?

That's what we built.

Encypher embeds invisible, cryptographically verifiable metadata directly into AI-generated text at the moment of creation.

Like a digital fingerprint baked into the content itself, it allows anyone to verify:

- ✔️ Was this generated by AI?

- 🧠 Which model produced it?

- ⏰ When was it created?

- 🧩 Why was it generated? (optional metadata like user, purpose, etc.)

It's tamper-evident (any edit to the signed text or metadata makes verification fail), invisible to the human eye, and verifiable in milliseconds.
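For readers curious how metadata can ride along invisibly inside plain text, here is a minimal sketch. It assumes a zero-width-character encoding, which is one common approach and not necessarily the one Encypher uses; `embed`, `extract`, and the metadata fields are hypothetical names chosen for this example.

```python
import json
from typing import Optional, Tuple

ZERO = "\u200b"  # zero-width space, used here for bit 0
ONE = "\u200c"   # zero-width non-joiner, used here for bit 1


def embed(text: str, metadata: dict) -> str:
    """Append the metadata to the text as a run of invisible characters."""
    payload = json.dumps(metadata, sort_keys=True).encode("utf-8")
    bits = "".join(f"{byte:08b}" for byte in payload)
    hidden = "".join(ONE if bit == "1" else ZERO for bit in bits)
    return text + hidden


def extract(marked: str) -> Tuple[str, Optional[dict]]:
    """Return the visible text and the decoded metadata (None if absent)."""
    hidden = [c for c in marked if c in (ZERO, ONE)]
    visible = "".join(c for c in marked if c not in (ZERO, ONE))
    if not hidden:
        return visible, None
    bits = "".join("1" if c == ONE else "0" for c in hidden)
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))
    return visible, json.loads(data.decode("utf-8"))


original = "Hello, world."
meta = {"ai_generated": True, "model": "example-model", "timestamp": "2025-01-01T00:00:00Z"}

marked = embed(original, meta)
visible, recovered = extract(marked)
```

The marked string renders the same as the original in most interfaces, yet the metadata can be read back from it. On its own this only hides data; the verifiable part comes from signing the payload.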

How It Works

Encypher is a lightweight Python package designed for developers.

It works with OpenAI, Anthropic, Gemini, or any custom LLM.

Just a few lines of code, and you're done.

It's:

- ✅ Open-source (AGPL-3.0)

- 🔁 Dual-licensed for commercial integration

- 🔐 Offline-verifiable (no server required)
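To illustrate what offline verification can look like, here is a minimal sketch using only the Python standard library. It is not Encypher's API: `sign` and `verify` are hypothetical helpers, and the HMAC with a shared demo key is a simplifying assumption. A production system would more likely use public-key signatures so that verifiers never hold the signing secret.

```python
import hashlib
import hmac
import json


def sign(text: str, metadata: dict, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the text and its metadata together."""
    message = json.dumps(
        {"text": text, "metadata": metadata}, sort_keys=True
    ).encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(text: str, metadata: dict, signature: str, key: bytes) -> bool:
    """Recompute the tag locally and compare in constant time. No network call."""
    expected = sign(text, metadata, key)
    return hmac.compare_digest(expected, signature)


key = b"demo-key-not-for-production"  # hypothetical key for the example
text = "This paragraph was drafted with AI assistance."
metadata = {"model": "example-model", "timestamp": "2025-01-01T00:00:00Z"}

signature = sign(text, metadata, key)

untouched_ok = verify(text, metadata, signature, key)
edited_text_ok = verify(text + " Edited.", metadata, signature, key)
edited_meta_ok = verify(text, {**metadata, "model": "other-model"}, signature, key)
```

Because the tag covers both the text and the metadata, changing either one causes verification to fail, and the check runs entirely on the verifier's machine.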

📦 A publisher, for example, can use Encypher to tag every AI-assisted article with verifiable metadata — protecting their reputation while aligning with future compliance needs.

Why It Matters Right Now

As governments move toward enforcing AI transparency laws (e.g., the EU AI Act), verifying the source of content will be critical for compliance and trust.

We're not trying to beat flawed detectors.

We're building a better system — one based on truth from the source, not guesswork after the fact.

Help Us Build the Open Standard for Verifiable AI Content

If you're working on:

- 🧭 Responsible AI

- 📄 Content authenticity

- ⚖️ AI governance or compliance

- 🛡️ Misinformation prevention

...we'd love to collaborate.

🔗 encypherai.com

📁 github.com/encypherai/encypher-ai

Final Thoughts

AI is rewriting the future of content. But without trust, it doesn't scale.

Let's build that trust — from the source.

👋 Developers: you can integrate Encypher in under 5 minutes.

🏢 Leaders: let's talk about aligning with your AI transparency goals.

💬 What use cases do you see?

🔁 If this resonates, consider sharing it.

⭐ Or give us a star on GitHub if you believe in the mission.

#EthicalAI #AITrust #AIProvenance #ContentAuthentication #OpenSource #MachineLearning #DigitalIntegrity #AIGovernance #TechInnovation #ResponsibleAI